Real World Hadoop – Hands on Enterprise Distributed Storage.

Master the art of manipulating files within a distributed storage enterprise platform. It’s easier than you think!

Course Access

You can access all the Big Data / Spark courses for one low monthly fee. Currently the membership site houses courses that cover deploying Hadoop with Cloudera and Hortonworks, as well as installing and working with Spark 2.0.

- POC-D membership site

This course can be purchased from

We will be manipulating the HDFS file system, but why are enterprises interested in HDFS to begin with?

The Hadoop Distributed File System (HDFS) is a distributed file system designed to run on commodity hardware. It has many similarities with existing distributed file systems. However, the differences from other distributed file systems are significant.

HDFS is highly fault-tolerant and is designed to be deployed on low-cost hardware.

HDFS provides high throughput access to application data and is suitable for applications that have large data sets.

HDFS relaxes a few POSIX requirements to enable streaming access to file system data.

HDFS is part of the Apache Hadoop Core project.

Hardware failure is the norm rather than the exception. An HDFS instance may consist of hundreds or thousands of server machines, each storing part of the file system’s data. The fact that there are a huge number of components and that each component has a non-trivial probability of failure means that some component of HDFS is always non-functional. Therefore, detection of faults and quick, automatic recovery from them is a core architectural goal of HDFS.
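To see why "some component is always non-functional" follows from scale, here is a minimal Python sketch. It assumes each component fails independently with the same probability; the function name `prob_any_failed` and the 0.1% failure probability are illustrative assumptions, not measured values.

```python
def prob_any_failed(n_components: int, p_fail: float) -> float:
    """Probability that at least one of n independent components is down.

    Assumes every component fails independently with probability p_fail,
    which is a simplification of real cluster behaviour.
    """
    return 1.0 - (1.0 - p_fail) ** n_components


if __name__ == "__main__":
    # Hypothetical per-component failure probability of 0.1%.
    for n in (10, 1_000, 10_000):
        print(f"{n:>6} components -> P(at least one down) = "
              f"{prob_any_failed(n, 0.001):.4f}")
```

Even with a very small per-component probability, the chance that something is broken approaches certainty as the component count grows. This is why automatic fault detection and recovery is treated as a design goal rather than an afterthought.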

Applications that run on HDFS have large data sets. A typical file in HDFS is gigabytes to terabytes in size. Thus, HDFS is tuned to support large files. It should provide high aggregate data bandwidth and scale to hundreds of nodes in a single cluster. It should support tens of millions of files in a single instance.
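As a rough illustration of what "large files" means in practice, the sketch below estimates how many blocks a file occupies and how much raw disk it consumes once replicated. HDFS stores files as blocks replicated across machines, and both settings are configurable. The 128 MiB block size and replication factor of 3 are assumptions for this example, and the function names are hypothetical.

```python
BLOCK_SIZE = 128 * 1024**2   # assumed block size: 128 MiB (configurable)
REPLICATION = 3              # assumed replication factor (configurable)


def block_count(file_bytes: int, block_size: int = BLOCK_SIZE) -> int:
    """Number of blocks needed to hold a file (integer ceiling division)."""
    return (file_bytes + block_size - 1) // block_size


def raw_storage(file_bytes: int, replication: int = REPLICATION) -> int:
    """Raw bytes consumed across the cluster once every block is replicated."""
    return file_bytes * replication


if __name__ == "__main__":
    one_tib = 1024**4
    print("blocks for a 1 TiB file:", block_count(one_tib))
    print("raw TiB used with replication:", raw_storage(one_tib) / 1024**4)
```

Under these assumptions, a single terabyte-scale file spans thousands of blocks, which is what lets many nodes serve parts of it in parallel.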

A computation requested by an application is much more efficient if it is executed near the data it operates on. This is especially true when the size of the data set is huge. This minimizes network congestion and increases the overall throughput of the system. The assumption is that it is often better to migrate the computation closer to where the data is located rather than moving the data to where the application is running. HDFS provides interfaces for applications to move themselves closer to where the data is located.
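The idea of moving computation to the data can be sketched as a toy placement routine in Python. This is not the Hadoop API; `pick_node`, the block identifiers, and the node names are all hypothetical, and a real scheduler weighs many more factors.

```python
from typing import Dict, List, Optional


def pick_node(block_id: str,
              block_locations: Dict[str, List[str]],
              free_nodes: List[str]) -> Optional[str]:
    """Choose where to run a task that reads `block_id`.

    Prefers a free node that already stores the block (a local read).
    Falls back to the first free node, which then has to fetch the
    block over the network. Returns None when no node is free.
    """
    holders = set(block_locations.get(block_id, []))
    for node in free_nodes:
        if node in holders:
            return node  # data-local: no network transfer needed
    return free_nodes[0] if free_nodes else None


if __name__ == "__main__":
    # Hypothetical cluster state: which nodes hold which block.
    locations = {"blk_1": ["node-a", "node-c"]}
    print(pick_node("blk_1", locations, ["node-b", "node-c"]))  # local holder wins
    print(pick_node("blk_1", locations, ["node-b"]))            # remote fallback
```

The design choice is simply to check for a local copy first: shipping a small task to a node is much cheaper than shipping gigabytes of data to the task.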

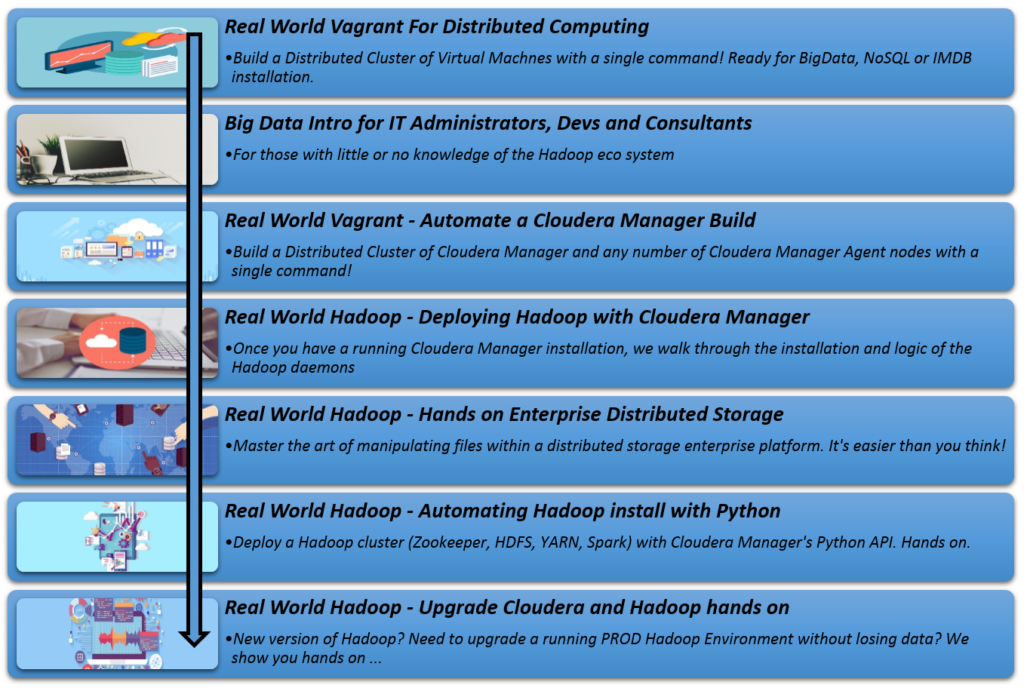

Recommended Cloudera Manager curriculum path. If you already have Cloudera Manager installed, you do not need to access the first three courses.