Big Data Intro for IT Administrators, Devs and Consultants

Grasp why “Big Data” knowledge is in hot demand among Developers, Consultants and Admins

Course Access

You can access all the Big Data / Spark courses for one low monthly fee. Currently the membership site houses courses that cover deploying Hadoop with Cloudera and Hortonworks, as well as installing and working with Spark 2.0.

- POC-D membership site

This course can be purchased from

Understand “Big Data” and grasp why, if you are a Developer, Database Administrator, Software Architect or an IT Consultant, you should be looking at this technology stack

There are more job opportunities in Big Data management and analytics than there were last year, and many IT professionals are prepared to invest time and money in training.

Why Is Big Data Different?

In the old days… you know… a few years ago, we would use systems to extract, transform and load (ETL) data into giant data warehouses that had business intelligence solutions built over them for reporting. Periodically, all the systems would back up and combine their data into a database where reports could be run and everyone could get insight into what was going on.
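To make the extract, transform and load steps concrete, here is a minimal sketch of one periodic batch run, using only the Python standard library. Everything in it is an assumption for illustration: the `RAW_EXPORT` data, the `orders` table, and the function names are hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical nightly export from one source system (illustrative data only).
RAW_EXPORT = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,alice,bad-value
"""


def extract(text):
    """Extract: read raw rows from the exported CSV text."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize customer names and drop rows with invalid amounts."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows the warehouse cannot use
        customer = row["customer"].strip().lower()
        cleaned.append((int(row["order_id"]), customer, amount))
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the (stand-in) warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_batch(text, conn):
    """Run one extract -> transform -> load cycle."""
    load(transform(extract(text)), conn)


def revenue_by_customer(conn):
    """A simple relational report over the loaded data."""
    query = (
        "SELECT customer, ROUND(SUM(amount), 2) FROM orders "
        "GROUP BY customer ORDER BY customer"
    )
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")
    run_batch(RAW_EXPORT, warehouse)
    print(revenue_by_customer(warehouse))
```

Note that the report only reflects data as of the last batch run, which is the limitation the next paragraph describes.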

The problem was that the database technology simply couldn’t handle multiple, continuous streams of data. It couldn’t handle the volume of data. It couldn’t modify the incoming data in real time. And the reporting tools were lacking: they couldn’t handle anything but a relational query on the back end. Big Data solutions offer cloud hosting, highly indexed and optimized data structures, automatic archival and extraction capabilities, and reporting interfaces designed to provide more accurate analyses that enable businesses to make better decisions.

Better business decisions mean that companies can reduce risk, cut costs, and increase marketing and sales effectiveness.

What Are the Benefits of Big Data?

This infographic from Informatica walks through the risks and opportunities associated with leveraging big data in corporations.

Big Data is Timely – Knowledge workers spend a large percentage of each workday attempting to find and manage data.

Big Data is Accessible – Senior executives report that accessing the right data is difficult.

Big Data is Holistic – Information is currently kept in silos within the organization. Marketing data, for example, might be found in web analytics, mobile analytics, social analytics, CRMs, A/B testing tools, email marketing systems, and more… each focused on its own silo.

Big Data is Trustworthy – Organizations measure the monetary cost of poor data quality. Things as simple as monitoring multiple systems for customer contact information updates can save millions of dollars.

Big Data is Relevant – Organizations are dissatisfied with their tools’ ability to filter out irrelevant data. Something as simple as filtering existing customers out of your web analytics can provide a ton of insight into your acquisition efforts.

Big Data is Authoritative – Organizations struggle with multiple versions of the truth depending on the source of their data. By combining multiple, vetted sources, more companies can produce highly accurate intelligence sources.

Big Data is Actionable – Outdated or bad data results in organizations making bad decisions that can cost billions.

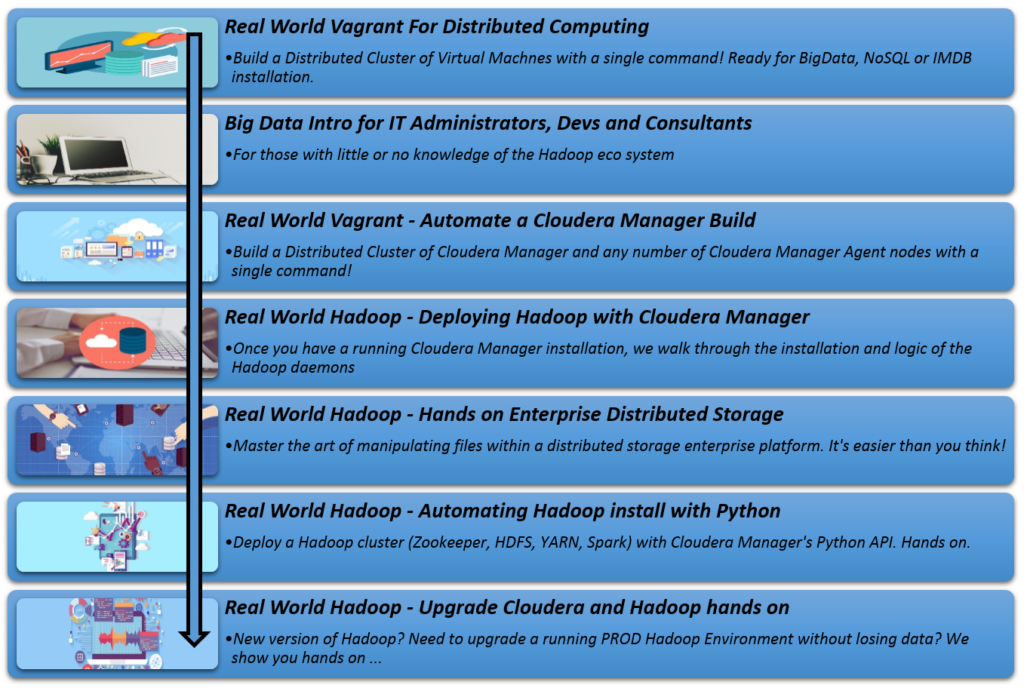

Recommended Cloudera Manager curriculum path. If you already have Cloudera Manager installed, you do not need to access the first three courses.