Real World Vagrant – Build an Apache Spark Development Env!

With a single command, build an IDE, Scala and Spark (1.6.2 or 2.0.1) Development Environment! Run in under 3 minutes!!

Course Access

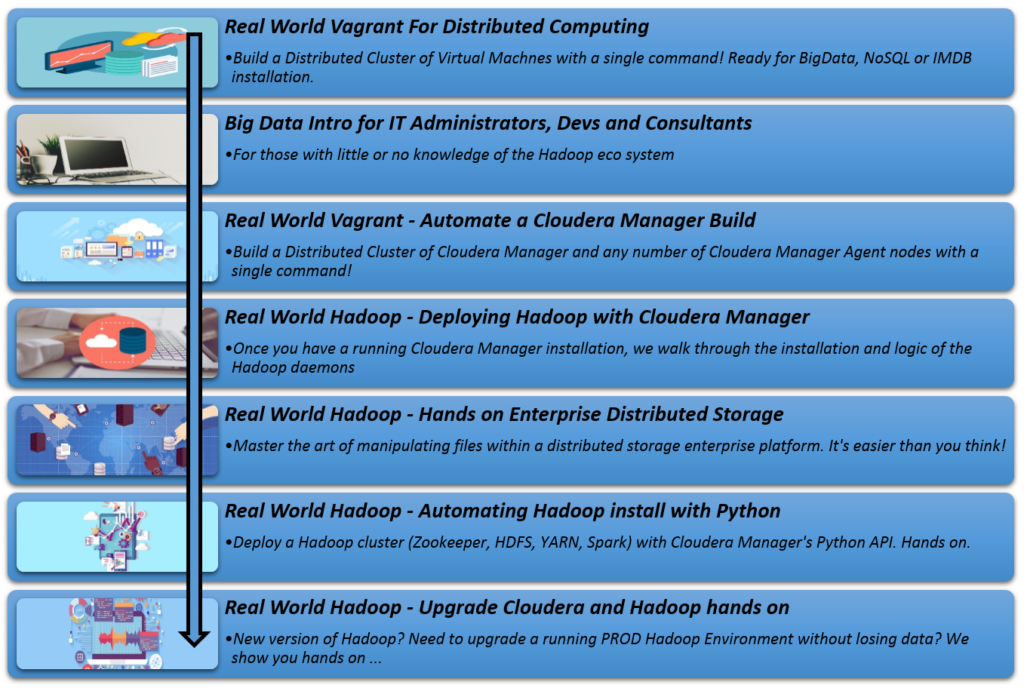

You can access all the Big Data / Spark courses for one low monthly fee. Currently, the membership site houses courses that cover deploying Hadoop with Cloudera and Hortonworks, as well as installing and working with Spark 2.0.

- POC-D membership site

This course can be purchased from

Note: This course is built on top of the “Real World Vagrant For Distributed Computing – Toyin Akin” course.

This course enables you to package a complete Spark development environment into your own custom 2.3GB Vagrant box.

Once the box is built, you no longer need to modify your Windows machine to get a fully fledged Spark environment working. With the final solution, you can boot up a complete Apache Spark environment in under 3 minutes!

Install any version of Spark you prefer. The build is codified for 1.6.2 and 2.0.1, but it is straightforward to extend it to a newer version.

Why Apache Spark …

Apache Spark runs programs up to 100x faster than Hadoop MapReduce in memory, or 10x faster on disk.

Apache Spark has an advanced DAG (directed acyclic graph) execution engine that supports acyclic data flow and in-memory computing.

Apache Spark offers over 80 high-level operators that make it easy to build parallel apps. And you can use it interactively from the Scala, Python and R shells.
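To give a feel for those operators, here is a minimal word-count sketch in Scala. It is an illustration only, not part of the course material: the object name `WordCountSketch`, the sample sentences, and the `local[*]` master setting are assumptions chosen for the example. It uses the `SparkConf` / `SparkContext` entry point, which is available in both Spark 1.6.2 and 2.0.1.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical example object; the name and sample data are illustrative only.
object WordCountSketch {
  def main(args: Array[String]): Unit = {
    // Run locally using all available cores (assumed setup for a dev box).
    val conf = new SparkConf().setAppName("WordCountSketch").setMaster("local[*]")
    val sc = new SparkContext(conf)
    try {
      // Distribute a small in-memory collection as an RDD.
      val lines = sc.parallelize(Seq(
        "spark makes parallel apps easy",
        "spark runs in memory"
      ))

      // Chain three high-level operators: flatMap, map, reduceByKey.
      val counts = lines
        .flatMap(_.split("\\s+"))   // split each line into words
        .map(word => (word, 1))     // pair each word with a count of 1
        .reduceByKey(_ + _)         // sum the counts per word

      // collect() brings the results back to the driver for printing.
      counts.collect().foreach { case (word, n) => println(s"$word: $n") }
    } finally {
      sc.stop() // always release the context
    }
  }
}
```

In the interactive Scala shell (`spark-shell`), a `SparkContext` is already provided as `sc`, so only the lines from `sc.parallelize` through `collect()` would be needed.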

Apache Spark can combine SQL, streaming, and complex analytics.

Apache Spark powers a stack of libraries including SQL and DataFrames, MLlib for machine learning, GraphX, and Spark Streaming. You can combine these libraries seamlessly in the same application.

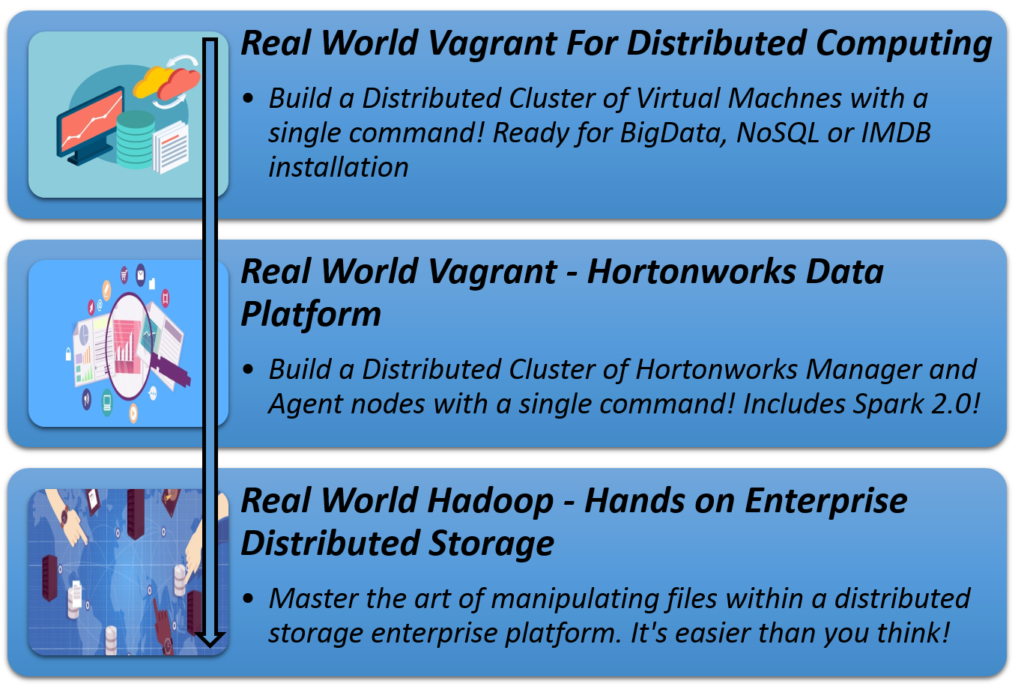

Recommended Spark course path. If you already have Spark installed, you do not need to take the first three courses.